The 2024 Nobel Prize in physics has been awarded to John Hopfield and Geoffrey Hinton for foundational discoveries and inventions that enable machine learning with artificial neural networks.

John Hopfield created an associative memory that can store and reconstruct images and other types of patterns in data.
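
To make "store and reconstruct" concrete, here is a minimal sketch. It follows the common textbook formulation of a Hopfield network, which is an assumption here: units take values +1/-1, weights are set by the Hebbian outer-product rule, and units are updated one at a time. The function names `train`, `energy`, and `recall` and the two 8-unit example patterns are illustrative only. They are not taken from Hopfield's paper.

```python
from typing import List

Pattern = List[int]  # one +1/-1 value per unit
Weights = List[List[float]]


def train(patterns: List[Pattern]) -> Weights:
    """Store patterns with the Hebbian outer-product rule (no self-connections)."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def energy(w: Weights, s: Pattern) -> float:
    """Network energy E = -1/2 * sum_ij w_ij s_i s_j; stored patterns tend to sit in local minima."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def recall(w: Weights, s: Pattern, max_sweeps: int = 10) -> Pattern:
    """Update one unit at a time (no update raises the energy) until the state is stable."""
    s = list(s)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(w[i][j] * s[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:  # fixed point reached: a local energy minimum
            break
    return s


if __name__ == "__main__":
    a = [1, 1, 1, 1, -1, -1, -1, -1]
    b = [1, -1, 1, -1, 1, -1, 1, -1]
    w = train([a, b])
    noisy = [-a[0]] + a[1:]  # corrupt pattern a by flipping its first unit
    restored = recall(w, noisy)
    print(restored == a, energy(w, noisy), energy(w, a))  # prints: True -1.5 -3.0
```

The corrupted input starts at a higher energy than the stored pattern. Each update moves the state downhill until it settles in the nearest stored pattern. This downhill settling is what lets the network reconstruct a pattern from a damaged copy.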

Geoffrey Hinton invented a method that can autonomously find properties in data, and so perform tasks such as identifying specific elements in pictures.
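
The method in question is the Boltzmann machine. The toy sketch below illustrates its learning rule under deliberately simplifying assumptions. The network is fully visible, meaning it has no hidden units, and its units take values +1/-1. The model averages in the learning rule are computed exactly by enumerating all 2^n states rather than estimated by sampling, which only works for a handful of units. The names `bm_energy`, `bm_distribution`, and `bm_train` and the four-example dataset are hypothetical.

```python
import itertools
import math
from typing import Dict, List, Tuple

State = Tuple[int, ...]  # one +1/-1 value per unit


def bm_energy(w: List[List[float]], b: List[float], s: State) -> float:
    """E(s) = -sum_{i<j} w_ij s_i s_j - sum_i b_i s_i (only the upper triangle of w is used)."""
    n = len(s)
    e = -sum(b[i] * s[i] for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            e -= w[i][j] * s[i] * s[j]
    return e


def bm_distribution(w: List[List[float]], b: List[float], n: int) -> Dict[State, float]:
    """Boltzmann distribution p(s) = exp(-E(s)) / Z, computed by enumerating all 2**n states."""
    states = list(itertools.product((-1, 1), repeat=n))
    unnormalized = [math.exp(-bm_energy(w, b, s)) for s in states]
    z = sum(unnormalized)
    return {s: u / z for s, u in zip(states, unnormalized)}


def bm_train(data: List[State], epochs: int = 200,
             lr: float = 0.1) -> Tuple[List[List[float]], List[float]]:
    """Gradient ascent on log-likelihood: nudge w_ij by <s_i s_j>_data - <s_i s_j>_model."""
    n = len(data[0])
    w = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for _ in range(epochs):
        model = bm_distribution(w, b, n)  # exact model averages for this epoch
        for i in range(n):
            data_i = sum(s[i] for s in data) / len(data)
            model_i = sum(p * s[i] for s, p in model.items())
            b[i] += lr * (data_i - model_i)
            for j in range(i + 1, n):
                data_ij = sum(s[i] * s[j] for s in data) / len(data)
                model_ij = sum(p * s[i] * s[j] for s, p in model.items())
                w[i][j] += lr * (data_ij - model_ij)
    return w, b


if __name__ == "__main__":
    # In every example, units 0 and 1 agree; unit 2 varies independently.
    data = [(1, 1, -1), (-1, -1, 1), (1, 1, 1), (-1, -1, -1)]
    w, b = bm_train(data)
    p = bm_distribution(w, b, 3)
    agree = sum(prob for s, prob in p.items() if s[0] == s[1])
    print(f"w01={w[0][1]:.2f} w02={w[0][2]:.2f} P(s0 == s1)={agree:.2f}")
```

Nothing tells the network that units 0 and 1 are linked. The learning rule discovers this on its own, so `w01` becomes clearly positive while `w02` stays near zero. Practical Boltzmann machines add hidden units and estimate the model averages by sampling, because enumerating every state is infeasible beyond tiny networks.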

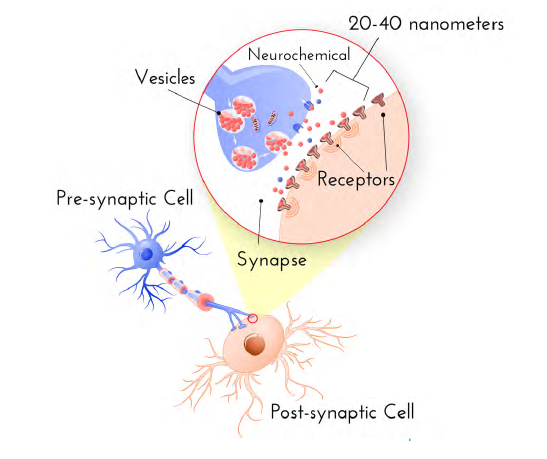

A synapse is the site where electrical nerve impulses are transmitted between two nerve cells (neurons) or between a neuron and a gland or muscle cell (effector).

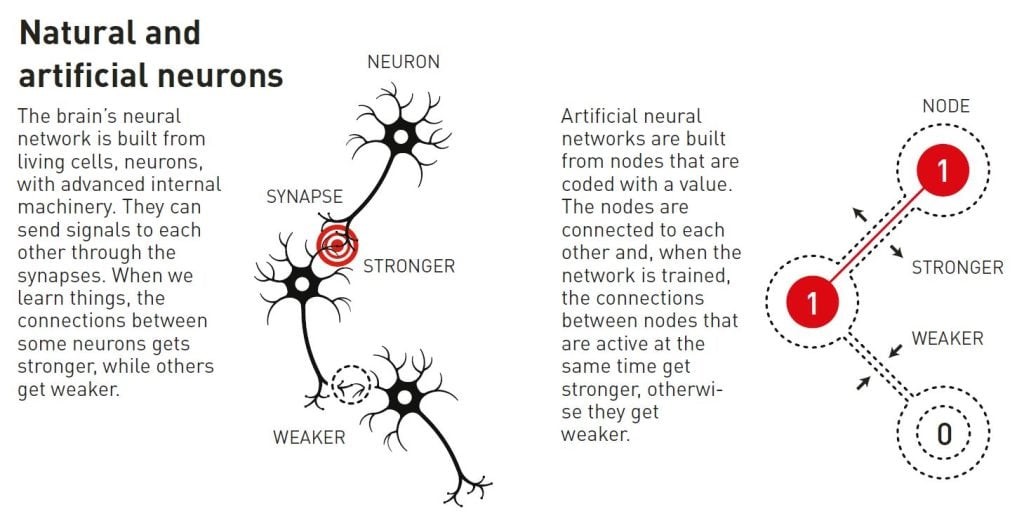

Hebbian learning is an idea in neuropsychology that if one neuron repeatedly triggers a second, the connection between the two becomes stronger.

|

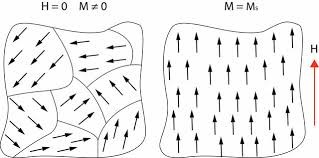

Atomic Spin |

|

Donald Hebb’s hypothesis holds that learning occurs because connections between neurons are strengthened when the neurons are active together.
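
A minimal sketch of Hebb's idea uses the rule in its simplest textbook form, Δw_i = η·x_i·y. Here x_i is the activity of a presynaptic neuron, y is the activity of the postsynaptic neuron, and η is a learning rate. The threshold neuron, the rate of 0.1, and the names `fires` and `hebbian_step` are illustrative assumptions.

```python
from typing import List


def fires(weights: List[float], x: List[float], threshold: float = 0.5) -> float:
    """Postsynaptic neuron fires (1.0) when its weighted input reaches the threshold."""
    return 1.0 if sum(w * xi for w, xi in zip(weights, x)) >= threshold else 0.0


def hebbian_step(weights: List[float], x: List[float], y: float,
                 rate: float = 0.1) -> List[float]:
    """Plain Hebbian rule: each weight grows by rate * x_i * y."""
    return [w + rate * xi * y for w, xi in zip(weights, x)]


if __name__ == "__main__":
    weights = [0.5, 0.5]
    for _ in range(5):
        x = [1.0, 0.0]  # presynaptic neuron 0 fires, neuron 1 stays silent
        y = fires(weights, x)  # neuron 0 alone is enough to trigger the output
        weights = hebbian_step(weights, x, y)
    print(weights)  # weight 0 has grown, weight 1 is unchanged
```

Only the connection whose neuron repeatedly took part in firing the output is strengthened. Under this plain rule, weights can grow without bound. Practical variants such as Oja's rule add a normalization term to keep them in check.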